Architecture Analysis

2025-11-24

Recap

- Software design is about creating a context (the architecture) for developers to write code that satisfies our objectives for the system.

- These objectives, in our framework, are the business goals we have for this system (generate revenue, increase users, support data analytics, save citizens time).

- We realize these objectives by understanding our prioritized quality attribute scenarios and architecture drivers for the system (what does performance mean? do we have to support mobile? can we assume this will be in Java?).

Why Analysis?

Software architecture analysis is about assessing a system design (ours, or other people’s) with respect to these goals.

Two objectives:

- Did the design choices support the business goals?

- Given our experience and knowledge, can we have some certainty that this design will “work”?

An important note: we are not trying to get to 100% certainty.

- it probably isn’t possible and

- that would take more money than would make sense (most of the time).

Cost Tradeoffs

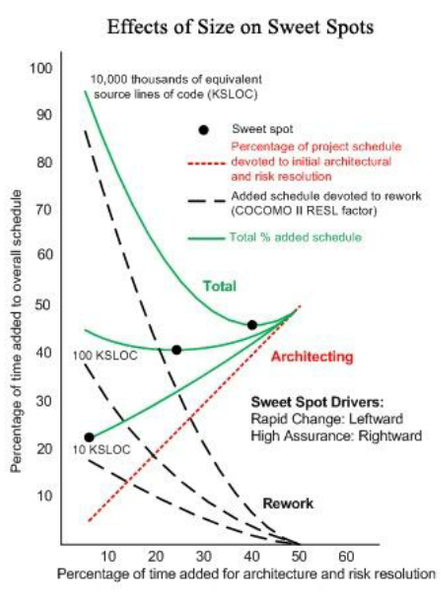

Consider the Boehm curve about up-front analysis vs rework.

Boehm cost tradeoff curve

A week of programming can save an hour of thinking. –Simon Brown

And keep in mind that optimizing for one goal often implies suboptimal solutions for the other goals.

In other words, if you think a change is nothing but beneficial, you might be missing something.
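
The shape of this tradeoff can be sketched with a toy model: analysis cost rises with the effort invested, rework cost falls with diminishing returns, and the total has a minimum somewhere in between. This is only an illustration. The linear and exponential forms and the constants `INITIAL_REWORK` and `DECAY` are assumptions made up for the example, not values taken from Boehm's data.

```python
import math

# Toy model of the tradeoff between up-front analysis and later rework.
# All constants are illustrative assumptions, not data from Boehm's studies.
INITIAL_REWORK = 60.0  # hypothetical rework cost (% of budget) with no analysis
DECAY = 0.15           # hypothetical rate at which analysis reduces rework


def analysis_cost(effort: int) -> float:
    """Up-front analysis cost grows linearly with the effort invested."""
    return float(effort)


def rework_cost(effort: int) -> float:
    """Rework cost falls with diminishing returns as analysis effort grows."""
    return INITIAL_REWORK * math.exp(-DECAY * effort)


def total_cost(effort: int) -> float:
    """Total cost is the sum of what we spend analyzing and what we spend reworking."""
    return analysis_cost(effort) + rework_cost(effort)


def sweet_spot(max_effort: int = 50) -> int:
    """Return the whole-number effort level with the lowest total cost."""
    return min(range(max_effort + 1), key=total_cost)


if __name__ == "__main__":
    best = sweet_spot()
    print(f"lowest total cost at {best}% analysis effort")
```

Both extremes cost more than the minimum in this model: no analysis leaves all the rework, and too much analysis costs more than the rework it prevents.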

Analysis Techniques

Architecture analysis can be internal: you whiteboard a possible design with some knowledgeable teammates and look for flaws.

This assumes personal discipline and team psychological safety:

- you are disciplined enough to be truly reflective,

- people feel comfortable criticizing each other, and

- you can see past your own biases.

External reviews

Analysis can also be external, either with architecture review boards 1, or with external analysis teams of consultants.

Analysis Criteria

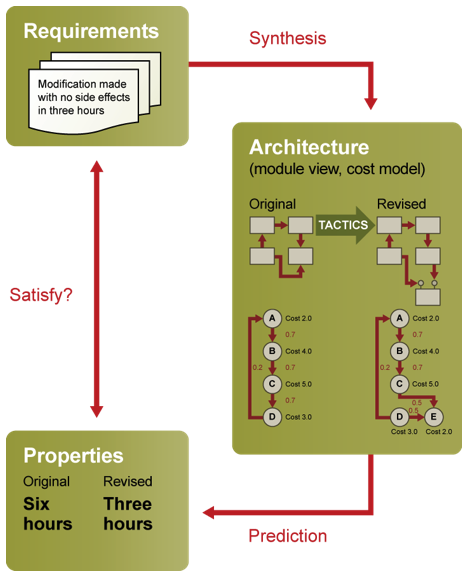

- In nearly every case you will want to have a clear set of criteria by which the design can be said to help or hurt.

- In our framework these are the quality attribute scenarios (QAS), which can act as “tests” for our rough analysis.

- A system ‘passes’ the analysis if the QAS’s response/response measure can be achieved (for a given level of confidence).
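
One way to picture a QAS acting as a "test" is the minimal sketch below. The class name, its fields, and the `passes` function are hypothetical, and treating "level of confidence" as the fraction of estimates that meet the response measure is an assumption made for the example, not a definition from the framework.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class QualityAttributeScenario:
    """Hypothetical, simplified QAS: only the parts needed for a pass/fail check."""
    attribute: str   # e.g. "performance"
    stimulus: str    # what happens to the system
    response: str    # what the system should do
    limit: float     # response measure: the largest acceptable value
    unit: str        # unit of the response measure


def passes(scenario: QualityAttributeScenario,
           estimates: Sequence[float],
           required_confidence: float = 0.9) -> bool:
    """Return True if enough estimates fall within the response measure.

    `estimates` are rough figures for the design under review, for example
    from prototypes or back-of-the-envelope calculations (an assumption here).
    """
    if not estimates:
        raise ValueError("need at least one estimate to assess the scenario")
    within_limit = sum(1 for value in estimates if value <= scenario.limit)
    return within_limit / len(estimates) >= required_confidence


if __name__ == "__main__":
    search = QualityAttributeScenario(
        attribute="performance",
        stimulus="user submits a search during peak load",
        response="results are displayed",
        limit=200.0,
        unit="ms",
    )
    # Four of the five estimates are within 200 ms, so confidence is 0.8.
    print(passes(search, [120, 150, 180, 190, 250], required_confidence=0.75))
```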

Architecture Tradeoff Analysis Method

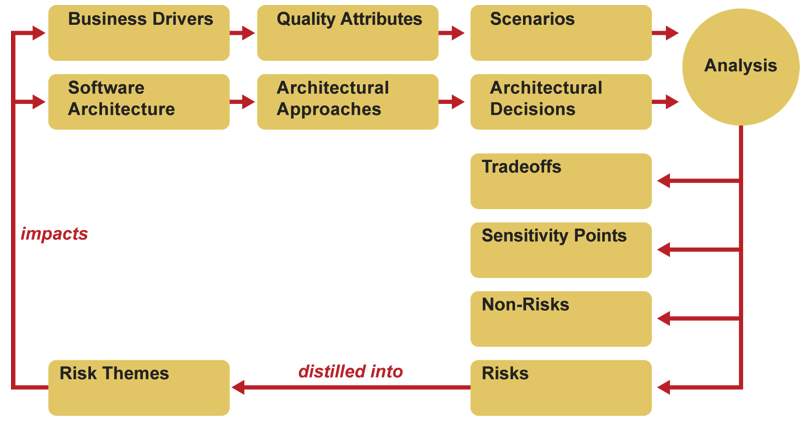

The Architecture Tradeoff Analysis Method (ATAM) is what the textbook presents as one fairly heavyweight form of architecture analysis.

ATAM workflow

The end goal of an ATAM is a list of possible risks that the system design, as currently stated, is facing.

Once the risks are identified, we prioritize them and fix the architecture through redesign or refactoring.

Steps in an ATAM

1. Present the ATAM. The consultants explain what is going to happen.

2. Present business drivers. The business team/owner shows what business priorities exist for the system. This includes important quality attribute requirements and drivers.

Steps (cont)

3. Present architecture. The technical team/architect explains the current state of the system. This might be the existing codebase, or some proposed design approaches. Most often the ATAM works best when the design is hypothetical (avoid rework!).

   - This presentation should delineate the key architectural approaches. At this point the analysis team will begin probing for risks.

Steps (cont)

4. Identify architectural approaches. Following the technical details, specific architectural structures can be identified.

   - For example, the choice to use a given framework such as Meteor; converting a monolith to microservices; upgrading the JVM to v1.8.

5. Generate quality attribute utility tree. From the business priorities, we can now formulate the prioritized scenarios.

6. Analyze architectural approaches. Since we have prioritized the scenarios, we start our analysis of the approaches using the most important (HH) scenarios.

Steps (last)

7. Brainstorm and prioritize scenarios. These are phase 2 activities: we bring in a wider group of stakeholders and broaden our prioritization and scenario generation.

8. Analyze architectural approaches. Repeat step 6 with the new QAS.

9. Present results. The analysis team gets together and synthesizes the risks into a manageable list for the clients to digest.
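
The prioritization behind the "most important (HH) scenarios" can be sketched as a sort over the leaves of the utility tree. The sketch assumes each scenario carries two ratings of H, M, or L, read here as business importance and technical difficulty, which is the common ATAM convention but is not spelled out in these notes. The `Leaf` class and the sample scenarios are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Lower rank sorts first, so "H" scenarios come before "M" and "L".
RANK = {"H": 0, "M": 1, "L": 2}


@dataclass(frozen=True)
class Leaf:
    """Hypothetical utility-tree leaf: one scenario with its two ratings."""
    attribute: str
    scenario: str
    importance: str  # assumed meaning: business importance (H/M/L)
    difficulty: str  # assumed meaning: technical difficulty (H/M/L)


def prioritize(leaves: List[Leaf]) -> List[Leaf]:
    """Order scenarios so (H, H) is analyzed first, then (H, M), and so on."""
    return sorted(leaves, key=lambda leaf: (RANK[leaf.importance], RANK[leaf.difficulty]))


if __name__ == "__main__":
    tree = [
        Leaf("usability", "New user completes signup without help", "L", "L"),
        Leaf("security", "Stolen session token is rejected", "M", "H"),
        Leaf("performance", "Search responds under peak load", "H", "H"),
        Leaf("modifiability", "Add a payment provider quickly", "H", "M"),
    ]
    for leaf in prioritize(tree):
        print(f"({leaf.importance},{leaf.difficulty}) {leaf.attribute}: {leaf.scenario}")
```

Step 6 would then work down this ordered list, and step 8 would repeat the same pass after phase 2 adds scenarios.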

ATAM Outputs

- Risks – potentially problematic architectural decisions.

  - Example: business logic is tied up in the SQL triggers, which makes it hard to extract when modernizing.

- Non-risks – good architectural decisions that are frequently implicit in the architecture.

  - Example: using Travis-CI for integration testing will help visibility and tool support for testing.

ATAM Outputs (cont)

- Sensitivity points – a property of one or more components (and/or component relationships) that is critical for achieving a particular quality attribute response.

  - Example: choosing Meteor as a framework means that breaking changes in Meteor could hurt performance.

- Tradeoffs – a property that affects more than one attribute and is a sensitivity point for more than one attribute.

  - Example: using 1024-bit encryption will help security but hurt performance.
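
The difference between a sensitivity point and a tradeoff comes down to how many quality attributes a property is critical for. A minimal sketch of that rule follows, using the two examples above. The `Decision` class and `classify` function are hypothetical names, and which attributes each decision affects is the judgment an analysis team would supply.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Decision:
    """Hypothetical record of one architectural property found during analysis."""
    description: str
    affects: FrozenSet[str]  # quality attributes whose response depends on it


def classify(decision: Decision) -> str:
    """Apply the definitions: one attribute is a sensitivity point, several is a tradeoff."""
    if len(decision.affects) > 1:
        return "tradeoff"
    if len(decision.affects) == 1:
        return "sensitivity point"
    return "neither"


if __name__ == "__main__":
    meteor = Decision("Use Meteor as the framework", frozenset({"performance"}))
    encryption = Decision("Use 1024-bit encryption", frozenset({"security", "performance"}))
    for decision in (meteor, encryption):
        print(f"{decision.description}: {classify(decision)}")
```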

Design Reviews

- the ATAM is geared toward larger, Complex systems (unknowns)

- simpler reviews are usually acceptable in a faster-moving org with a Complicated problem

- e.g., at Google, a project starts with a design document outlining key goals, implementation approach, and tradeoffs.1

Active Reviews of Intermediate Designs

- “timely discovery of errors, inconsistencies, or inadequacies”

- avoid stacks of documents approach

- drive design reviews using scenarios testing the key goals

- don’t assume all relevant stakeholders are available

ARID structure

- 3 teams:

  - review team (scribe, facilitator, observers)

  - lead designer

  - reviewers (stakeholders for design, e.g. devs who will use it)

ARID Process:

- Outline ARID process

- Present design

- Identify scenarios

- Review design

ARID is a lightweight ATAM that does not insist on all stakeholders being present. The downside is that you are more likely to miss key scenarios and architecture drivers.

Exercise

Take your system documents as evidence of the system’s architecture.

- Pick a QAS for your system.

- Generate 1 possible risk that this system is facing.

- Identify 1 sensitivity point.

- Capture this in a simple document/piece of paper, with your names.

Summary

A good system architecture plan will support varying levels of analysis.

Analysis allows us to investigate potential problems and risks for a given architecture plan.

The risks can then be added to a risk register or managed (e.g., doing extra investigations).

References

Neil Ernst ©️ 2024-5