Ethics and SE

2025-11-23

Ethics and Software Architecture

- asked to do bad things

- responsibility to people and planet

- AI and ethical behavior

- indigenization and software engineering

Reminder on civility

Ethical questions can be contentious and personal. Please be respectful of other people’s right to speak and learn in this classroom.

Why Care About Ethics?

- right and wrong?

- legal frameworks and penalties?

Ethics topics in SE for Data Science

- The Tay bot

- Deepfakes

- Skin tone and soap dispensers

- COMPAS

It's easy to make “innocent” mistakes when we work with lots of data. They are still unacceptable.

Ethics in SE for Data Science

Q: There are lots of ethics problems. Which are SE-specific?

A: Maybe everything: software is used everywhere?

Using AI for all the things

Trading Accuracy and Fairness

Example from “Data Science from Scratch” by Joel Grus

Fairness might cost some accuracy, for example when we use k-anonymity to hide fine-grained details.
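To make the k-anonymity idea concrete, here is a minimal Python sketch. The records, the choice of age and ZIP code as quasi-identifiers, and the helper names `generalize` and `is_k_anonymous` are all hypothetical. A table is k-anonymous when every combination of quasi-identifier values is shared by at least k records; coarsening the values gets us there, at the cost of detail a model could otherwise have used.

```python
from collections import Counter

# Hypothetical records: (age, zip_code, acted). Age and ZIP code are the
# quasi-identifiers that could re-identify someone when combined.
records = [
    (23, "98101", 1),
    (27, "98105", 0),
    (25, "98109", 1),
    (41, "98201", 0),
    (44, "98203", 1),
    (47, "98208", 0),
]


def generalize(age, zip_code):
    """Coarsen quasi-identifiers: 10-year age band and 3-digit ZIP prefix."""
    low = (age // 10) * 10
    return (f"{low}-{low + 9}", zip_code[:3])


def is_k_anonymous(rows, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    return all(count >= k for count in Counter(rows).values())


raw_qids = [(age, zip_code) for age, zip_code, _ in records]
coarse_qids = [generalize(age, zip_code) for age, zip_code, _ in records]

print(is_k_anonymous(raw_qids, k=3))     # False: every raw record is unique
print(is_k_anonymous(coarse_qids, k=3))  # True: two groups of three records
```

After generalization a model can no longer tell a 23-year-old from a 27-year-old in the same ZIP prefix; that lost detail is the accuracy being traded away.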

| Prediction | People | Acted | % acted |
|---|---|---|---|
| Unlikely | 125 | 25 | 20% |
| Likely | 125 | 75 | 60% |

But what if the population is made up of two groups, A and B?

| Group | Prediction | People | Acted | % acted |
|---|---|---|---|---|
| A | Unlikely | 100 | 20 | 20% |
| A | Likely | 25 | 15 | 60% |
| B | Unlikely | 25 | 5 | 20% |
| B | Likely | 100 | 60 | 60% |
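The arithmetic behind the group table can be checked with a short Python sketch; the dictionary layout and the helper names `action_rate` and `label_share` are illustrative, not from Grus. It shows that a given label means the same action rate in A and in B (the model is calibrated within each group), even though the two groups receive very different label distributions.

```python
# Counts from the group table: (group, prediction) -> (people, acted)
TABLE = {
    ("A", "Unlikely"): (100, 20),
    ("A", "Likely"): (25, 15),
    ("B", "Unlikely"): (25, 5),
    ("B", "Likely"): (100, 60),
}


def action_rate(prediction, group=None):
    """Fraction of people with this label (optionally within one group) who acted."""
    people = acted = 0
    for (g, p), (n, a) in TABLE.items():
        if p == prediction and (group is None or g == group):
            people += n
            acted += a
    return acted / people


def label_share(group, prediction):
    """Fraction of a group's members who received this label."""
    group_size = sum(n for (g, _), (n, _) in TABLE.items() if g == group)
    return TABLE[(group, prediction)][0] / group_size


for pred in ("Unlikely", "Likely"):
    # Overall rate, then the rate within A and within B.
    print(pred, action_rate(pred), action_rate(pred, "A"), action_rate(pred, "B"))
# Unlikely 0.2 0.2 0.2
# Likely 0.6 0.6 0.6

print(label_share("A", "Likely"), label_share("B", "Likely"))
# 0.2 0.8
```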

Hidden confounds (or at least, factors not included in the model) interact with group membership.

Arguments

- 80% of A is labelled unlikely but 80% of B is labelled likely: the predictions differ across groups.

- It’s fine: “unlikely” always means a 20% chance of action, so the model is accurate and the labels mean the same thing for both groups.

- But 40/125 people in B (32%) were wrongly predicted to be likely to do something!

- Only 10/125 people in A (8%) were wrongly predicted to be likely, so B is stigmatized.

- Vice versa for false “unlikely” labels: 20/125 people in A (16%) but only 5/125 in B (4%).
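A companion Python sketch reproduces the percentages in these arguments. It reports two views of the errors: the framing used above, where wrong labels are divided by the group's size (125), and the more conventional false positive and false negative rates, which divide by the people who did not act or did act. The helper name `errors` is hypothetical.

```python
# Counts from the group table: (group, prediction) -> (people, acted)
TABLE = {
    ("A", "Unlikely"): (100, 20),
    ("A", "Likely"): (25, 15),
    ("B", "Unlikely"): (25, 5),
    ("B", "Likely"): (100, 60),
}


def errors(group):
    likely_n, likely_acted = TABLE[(group, "Likely")]
    unlikely_n, unlikely_acted = TABLE[(group, "Unlikely")]
    total = likely_n + unlikely_n
    false_likely = likely_n - likely_acted    # labelled "likely" but did not act
    false_unlikely = unlikely_acted           # labelled "unlikely" but acted anyway
    non_actors = false_likely + (unlikely_n - unlikely_acted)
    actors = likely_acted + unlikely_acted
    return {
        "wrong_likely_share": false_likely / total,      # framing used above
        "wrong_unlikely_share": false_unlikely / total,
        "false_positive_rate": false_likely / non_actors,
        "false_negative_rate": false_unlikely / actors,
    }


for g in ("A", "B"):
    for name, value in errors(g).items():
        print(g, name, f"{value:.0%}")
```

Both views point the same way: B's false positive rate is about 67% (40/60) against about 11% (10/90) for A, while A bears most of the false “unlikely” labels.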

Real world impact

What if the “action” is “passing a screening interview”?

What if Group A is “Citizens” and Group B is “non-Citizens”?

What if Group A is “Python users” and Group B is “R users”?

See also “21 Types of Fairness” by Arvind Narayanan.

Collaboration

Who and what do we work with? Surveillance equipment for totalitarian regimes? Face recognition for drones used by a powerful military? Do we hand over user info for any legal request?

Interpretation and Explainability

Can we show why a model made a particular decision? Does it matter? Compare decision trees with CNNs.
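Part of what makes a decision tree interpretable is that every prediction comes with a readable path of tests. A minimal Python sketch with a hand-written tree; the features, thresholds, and nested-tuple representation are hypothetical, chosen only for illustration. A CNN offers no comparable path, since its decision is spread across many learned weights.

```python
# Hypothetical hand-written tree for a screening decision.
# Internal node: (feature, threshold, left_subtree, right_subtree); leaf: a label string.
TREE = ("years_experience", 2,
        "reject",
        ("test_score", 70, "reject", "interview"))


def predict_with_reasons(tree, applicant):
    """Walk the tree, recording each test taken, and return (label, reasons)."""
    reasons = []
    node = tree
    while not isinstance(node, str):
        feature, threshold, left, right = node
        value = applicant[feature]
        if value <= threshold:
            reasons.append(f"{feature}={value} <= {threshold}")
            node = left
        else:
            reasons.append(f"{feature}={value} > {threshold}")
            node = right
    return node, reasons


label, why = predict_with_reasons(TREE, {"years_experience": 5, "test_score": 65})
print(label, why)
# reject ['years_experience=5 > 2', 'test_score=65 <= 70']
```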

LIME (Local Interpretable Model-agnostic Explanations): a tool for explaining individual predictions of machine learning models.
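The core idea behind LIME can be sketched without the library: perturb the input around one instance, query the black-box model, weight each sample by its closeness to the instance, and fit a weighted linear model whose coefficients serve as the local explanation. The sketch below is a hand-rolled simplification in plain Python (no interpretable feature representation, no feature selection), not the `lime` package's API; `black_box`, `solve3`, `explain_locally`, and their parameters are hypothetical.

```python
import math
import random


def black_box(x1, x2):
    # Hypothetical opaque model: nonlinear in x1, linear in x2.
    return x1 ** 2 + 0.5 * x2


def solve3(a, b):
    """Solve the 3x3 system a @ w = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    w = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        w[r] = (m[r][3] - sum(m[r][c] * w[c] for c in range(r + 1, 3))) / m[r][r]
    return w


def explain_locally(f, x0, n_samples=500, sigma=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate to f around the point x0."""
    rng = random.Random(seed)
    xtwx = [[0.0] * 3 for _ in range(3)]  # weighted X^T X
    xtwy = [0.0] * 3                      # weighted X^T y
    for _ in range(n_samples):
        # 1. Perturb the instance with Gaussian noise.
        z = (x0[0] + rng.gauss(0, sigma), x0[1] + rng.gauss(0, sigma))
        # 2. Weight the sample by an exponential kernel on its distance to x0.
        dist2 = (z[0] - x0[0]) ** 2 + (z[1] - x0[1]) ** 2
        weight = math.exp(-dist2 / kernel_width ** 2)
        # 3. Accumulate the weighted least-squares normal equations.
        features = (1.0, z[0], z[1])
        y = f(*z)
        for i in range(3):
            xtwy[i] += weight * features[i] * y
            for j in range(3):
                xtwx[i][j] += weight * features[i] * features[j]
    _intercept, w1, w2 = solve3(xtwx, xtwy)
    return {"x1": w1, "x2": w2}


print(explain_locally(black_box, (1.0, 0.0)))
```

At the point (1, 0) the surrogate's weights come out close to 2 for `x1` and 0.5 for `x2`, the local slopes of the black box there. A different point would get a different explanation, which is the “local” in LIME.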

Recommendations

What should we be recommending? Everything, even if our users are unsavory? What should a company be required to “censor”? (Note that free speech protections usually apply only to restrictions imposed by governments.)

Biased Data

We train our models on datasets that may themselves reflect the existing biases in our world.

- English-language text

- Racially motivated justice system decisions

- Social media posts

We also need to consider how well the dataset we trained on reflects the problem we are interested in today!
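One rough way to ask whether the training data still reflects the problem is to compare the distribution of some attribute in the training set with the population the model now serves. A minimal Python sketch using total variation distance; the language shares are invented for illustration, and the helper name `total_variation` is hypothetical. A distance of 0 means identical distributions and 1 means no overlap at all.

```python
def total_variation(p, q):
    """Total variation distance between two categorical distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Hypothetical shares of each language in the training data vs. current users.
training = {"English": 0.90, "French": 0.08, "Other": 0.02}
deployment = {"English": 0.60, "French": 0.30, "Other": 0.10}

print(round(total_variation(training, deployment), 3))  # 0.3

# Categories that are rarer in training than among the people we now serve.
underrepresented = [k for k in deployment if training.get(k, 0.0) < deployment[k]]
print(underrepresented)  # ['French', 'Other']
```

A single number like this cannot say whether the model is biased, but a large gap is a cue to look harder at how the model performs for the underrepresented categories.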

Data Protection and Privacy

How much information about individuals can be extracted from the model? How much protection does a user get? Can the model be retrained to forget a specific person?
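For some simple models, forgetting a person is exact. The hypothetical `CountModel` below stores only per-category sums, so subtracting one person's records yields the same model as retraining without them. This is a sketch under that assumption only: for models such as neural networks, removing one person's influence is much harder and remains an active research area (machine unlearning).

```python
from collections import defaultdict


class CountModel:
    """Toy model (hypothetical): predicts the action rate per category from counts."""

    def __init__(self):
        self.people = defaultdict(int)
        self.acted = defaultdict(int)

    def learn(self, category, acted):
        self.people[category] += 1
        self.acted[category] += int(acted)

    def forget(self, category, acted):
        # Exact unlearning: undo this record's contribution to the sums.
        self.people[category] -= 1
        self.acted[category] -= int(acted)

    def rate(self, category):
        n = self.people[category]
        return self.acted[category] / n if n else None


# Hypothetical training records: (user_id, category, acted)
records = [("u1", "A", True), ("u2", "A", False), ("u3", "A", True), ("u4", "B", False)]

model = CountModel()
for _, category, acted in records:
    model.learn(category, acted)

# User u3 asks to be forgotten: subtract their records...
for user_id, category, acted in records:
    if user_id == "u3":
        model.forget(category, acted)

# ...and compare with a model retrained from scratch without u3.
retrained = CountModel()
for user_id, category, acted in records:
    if user_id != "u3":
        retrained.learn(category, acted)

print(model.rate("A"), retrained.rate("A"))  # 0.5 0.5
```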

Security

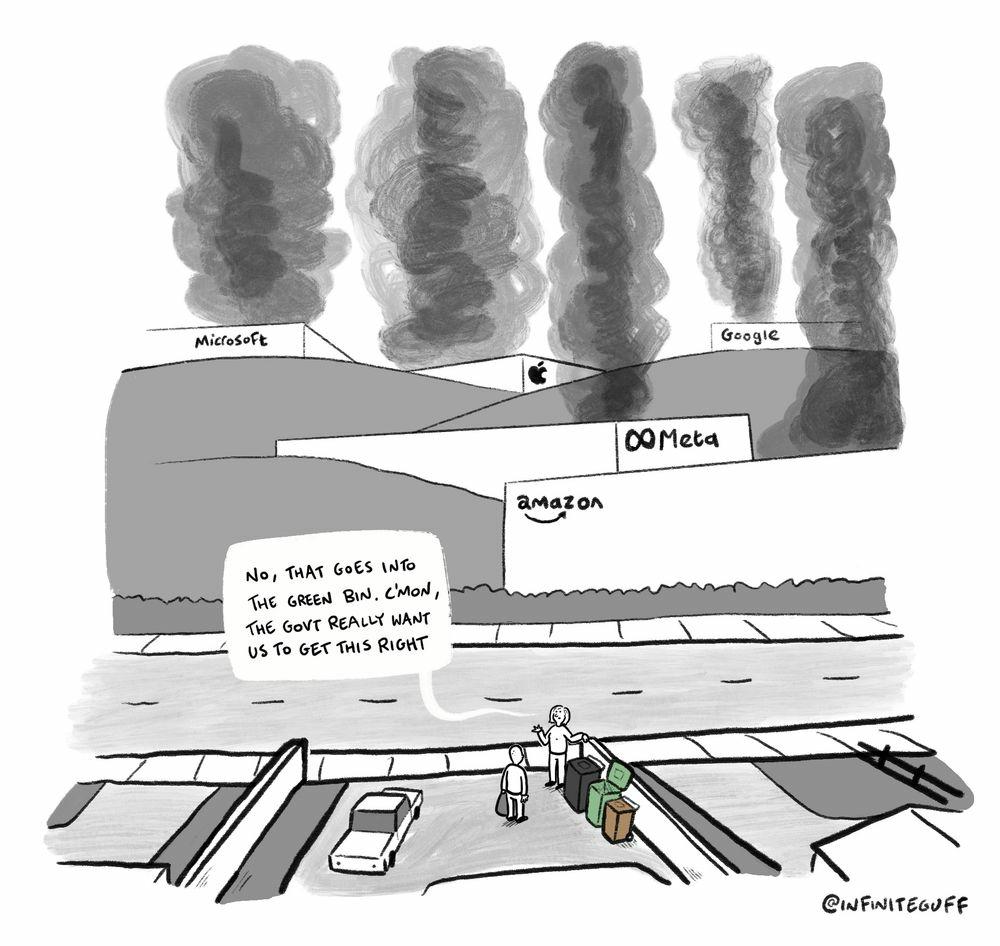

Sustainability

So, what should you do?

https://newsletter.pragmaticengineer.com/p/asked-to-do-something-illegal-at

This would suck! You have no easy options.

- VW: “please help us cheat on emissions tests”

- FTX: “please help cover up fraud”

FTX

- Talk to a lawyer about how to avoid assisting a crime.

- Turn whistleblower.

- Quit the company, to avoid further aiding the fraud.

Neil Ernst ©️ 2024-5